DBcloudbin is designed to reduce your database size by moving the binary content (documents, images, PDFs, …) that many applications store in the database to optimized, inexpensive cloud object storage.

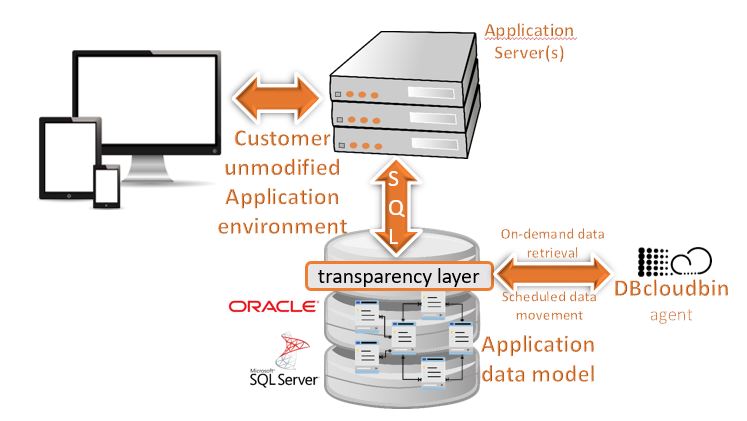

The nice part is that you don’t have to modify your application to do that. We create a transparency layer, based on your application data model, that stays compatible with the SQL your application layer already issues, while letting you selectively move content out of the database. This dramatically reduces its size (and, as a logical consequence, the infrastructure and operational costs). Check our detailed savings information and calculator.

There are two transparency models, called layered (the default) and inline. Depending on your application characteristics and maintenance processes, you may find one more suitable than the other. Check here for additional details.

Just download, set up & move your data to the Cloud!

DBcloudbin is simple and effective. You run the installer, identify the database schema of your application, select the tables with binary (BLOB) content that are suitable for moving to our object store, and you are all set. We provide a CLI command (dbcloudbin) to execute common tasks such as archive operations (dbcloudbin archive). To archive, you just define the subset of your managed tables you want to archive, based on SQL logical conditions, and (optionally) add the -clean argument to erase the BLOB from the database, freeing the space it consumed. The data is securely stored in our Cloud Object Store, and a reference to its location (as well as an encryption key) is saved in your database. When your application issues a SQL query (the same query it issued before the DBcloudbin installation), data that is present locally is served locally; if it is stored in our Cloud Object Store, the database silently fetches it from our system and serves it to the application as if it were local, just with slightly higher latency. From an application perspective, nothing changes.
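To make this flow concrete, here is a minimal Python sketch of the idea, not DBcloudbin’s actual implementation. It assumes an in-memory SQLite database in place of Oracle/SQL Server and a plain dictionary in place of the Cloud Object Store. The `documents` table, the `remote_ref` column and the `archive` and `read_content` functions are hypothetical names chosen for illustration; in the real product the fallback happens inside the database, so the application never calls anything like `read_content` itself.

```python
import sqlite3

# Hypothetical stand-in for the remote object store: reference -> bytes.
object_store = {}

db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE documents ("
    " id INTEGER PRIMARY KEY,"
    " status TEXT,"
    " content BLOB,"     # binary content while it is still stored locally
    " remote_ref TEXT)"  # reference into the object store once archived
)
db.executemany(
    "INSERT INTO documents (id, status, content) VALUES (?, ?, ?)",
    [(1, "open", b"draft contract"), (2, "closed", b"signed contract")],
)


def archive(where_sql, clean=False):
    """Copy rows matching a SQL condition to the object store.

    With clean=True the local BLOB is erased afterwards, which mirrors the
    role the text gives to the -clean argument.
    """
    rows = db.execute(
        "SELECT id, content FROM documents "
        f"WHERE content IS NOT NULL AND remote_ref IS NULL AND ({where_sql})"
    ).fetchall()
    for row_id, content in rows:
        ref = f"documents/{row_id}"
        object_store[ref] = content
        if clean:
            db.execute(
                "UPDATE documents SET remote_ref = ?, content = NULL WHERE id = ?",
                (ref, row_id),
            )
        else:
            db.execute(
                "UPDATE documents SET remote_ref = ? WHERE id = ?", (ref, row_id)
            )
    db.commit()
    return len(rows)


def read_content(row_id):
    """Serve the content locally if present, otherwise fetch it remotely."""
    content, ref = db.execute(
        "SELECT content, remote_ref FROM documents WHERE id = ?", (row_id,)
    ).fetchone()
    if content is not None:
        return content
    return object_store[ref]


# Archive only the rows that match a logical condition, erasing the local copy.
archived = archive("status = 'closed'", clean=True)
```

After this runs, `read_content(1)` is still answered from the local table, while `read_content(2)` is answered from the stand-in object store; the caller sees the same bytes in both cases.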

We take security very seriously, both from a data access/data loss perspective and from a data integrity perspective. Check our detailed information on security and data integrity.

Curious? Just try it! In a nutshell, this is the process:

- Check out our trial and register for the service.

- We will provision 5 GB for you for free in our Cloud Object Store and send you the link to our installer and the service credentials.

- Identify a non-production Oracle/SQL Server database and an application instance where you can test the solution.

- Run our setup wizard. It will do everything for you, including creating the transparency layer. Check our detailed setup instructions.

- Test your application to check that it keeps working as expected.

- Run the dbcloudbin archive command to move the subset of content you choose to our Object Store. Check how the application behaves. The operation is transactionally consistent, so you can run it while your application is online!

Where can you use DBcloudbin?

We define the ideal scenario as:

- You are a company with large relational enterprise databases (currently we support Oracle and SQL Server; other RDBMS are coming shortly).

- A significant share of the content stored by your application is binary (documents, videos, pictures, …) and is stored in the database.

- You have a non-production environment where you can test your application compatibility.

Not sure if your database meets the criteria? Run our RoI tool to check the size of the potentially archivable content and get the potential savings figures!

DBcloudbin Solution Architecture

Our architecture is neat and simple. All the complexity is hidden under the covers. We use a DBcloudbin agent (installed as a service on a Windows or Linux host, or packaged directly in a virtual appliance in our Cloud marketplace offerings) to act as a bridge between your database and our Cloud Object Store service. A ‘dbcloudbin’ user and schema in your database store the configuration settings. The installer creates your application’s specific transparency layer, which holds the identifiers of the content moved to the DBcloudbin Object Store and keeps the schema ‘SQL client compatible’ (so the queries, inserts, updates and deletes your application issued before installation keep working when issued through the transparency layer). And that’s all!

Doubts? Keep reading…

What about performance?

Our experience shows that, in many cases, performance is even better after archiving your BLOB data. The reasons for that claim are:

- Insert operations always go to the database. Moving data to the cloud is an asynchronous, decoupled operation, so inserts are not affected.

- Read operations on binary data (documents, pictures, …) are in most cases a ‘human action’ (e.g. an operator opening a document in a web interface). Human interactions take on the order of seconds, whereas databases work at millisecond scale. The slightly higher latency you may experience (provided you have a reliable and fast Internet connection) is almost imperceptible in a human interaction.

- Our solution is backed by top-class Google Cloud Platform infrastructure with high-end, redundant and parallel services. In on-premises scenarios, you should use a well-implemented object storage appliance provided by one of our supported manufacturers.

- The dramatic reduction in size in your database after moving the ‘fat’ data to the object storage will produce, among other benefits, a much faster processing of those relational queries that do have real impact on performance (e.g. listing or filtering or reporting data in your application by different criteria). So the end-user will perceive a much more responsive application.

Who can see my data?

Only you :-). More precisely, only your database schema and those database users you have allowed to do so. We don’t break the RDBMS security model, so your data stays as secure as it was before. The same users and roles that had access to the data keep that access, and no others gain it. When moving data to our Cloud Object Store service, we generate a secure random key and encrypt the content on the fly with a secure AES cipher, storing the key in your database table. So the content lands in our service encrypted, and we have no access to the key. In addition, the transmission channel is secured with an SSL connection.

So, yes, you still have to keep backing up your database; if you lose your database you lose your keys (and hence, your content). But backing up your database after, say, an 80% reduction in size (the content reference is just a few bytes) is a much easier (and cheaper!) story.
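As a back-of-the-envelope illustration of that backup argument, the following Python sketch estimates the database size once the BLOBs are replaced by small references. All figures are made up for illustration: the 1,000 GB database, the 800 GB of binary content, the two million objects and the 64 bytes per reference (location plus key) are assumptions, not DBcloudbin measurements, and the estimate only applies when the local BLOBs are actually erased.

```python
def size_after_archive(total_bytes, blob_bytes, blob_count, ref_bytes=64):
    """Estimate database size once BLOBs are replaced by fixed-size references.

    ref_bytes is an assumed per-object overhead (location reference plus key).
    """
    if blob_bytes > total_bytes:
        raise ValueError("blob_bytes cannot exceed total_bytes")
    return total_bytes - blob_bytes + blob_count * ref_bytes


GB = 10**9

# Illustrative figures only: a 1,000 GB database holding 800 GB of BLOBs
# spread over two million objects.
total = 1000 * GB
after = size_after_archive(total, blob_bytes=800 * GB, blob_count=2_000_000)
reduction = 1 - after / total

print(f"{after / GB:.1f} GB after archiving, {reduction:.1%} smaller")
```

With these assumed inputs the references add only about 0.13 GB, so the size to back up drops to roughly a fifth of the original.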

I have an object store and want to stay on-premises

No problem. We have a pluggable architecture, so our solution can talk to a variety of Object Store technologies from the main manufacturers (Hitachi, Dell-EMC, IBM, …). We provide licenses for any of the supported object storage manufacturers, so your content stays inside your data center for its full lifecycle. Just contact us and tell us your requirements.

I have my own Cloud Service Provider

That’s fine. We can provide you with the logic of the service while you provide the storage directly from your Cloud Service Provider. In fact, we have pre-built virtual appliances available at the main Cloud providers (AWS, Azure, Google Cloud Platform). Check our marketplace offerings. If you have additional needs, please contact us and tell us your requirements.

Are you a service provider and want to include the DBcloudbin service in your portfolio? Contact us and we will discuss further.